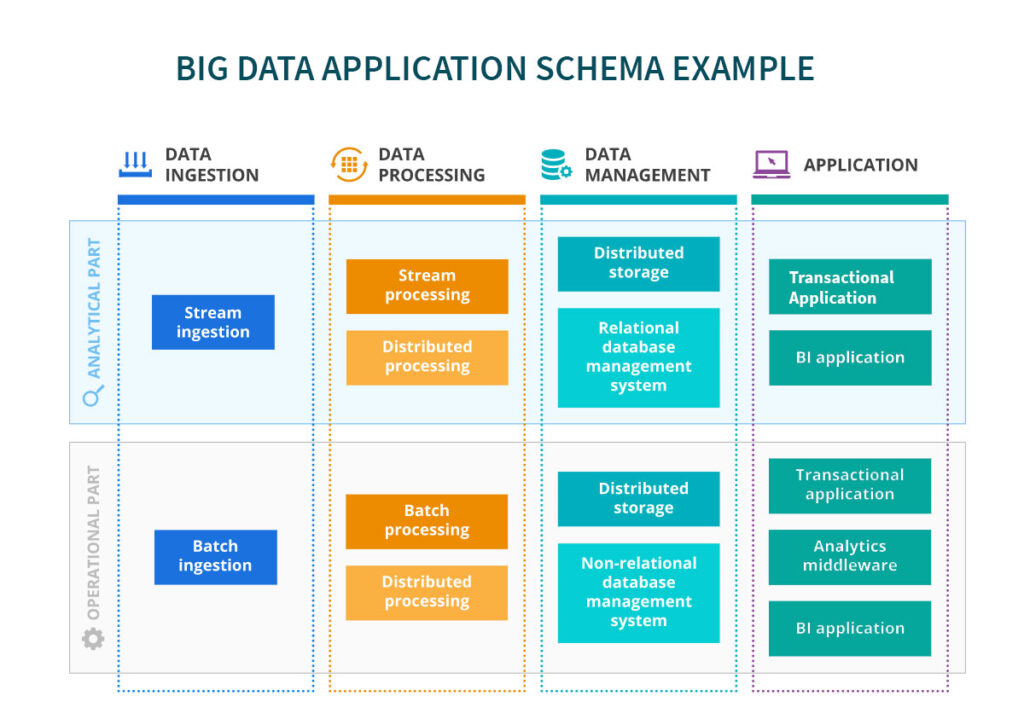

Testing a big data application that combines operational and analytical parts involves validating the app’s correct and smooth functioning (including testing of event streaming and analytics workflows, the data warehouse (DWH) and non-relational database, and the app’s complex integrations), as well as its availability, response time, optimal resource consumption, data integrity, and security.

A big data application comprising operational and analytical parts requires thorough functional testing at the API level. First, the functionality of each big data app component should be validated in isolation.

For example, if your big data operational solution has an event-driven architecture, a test engineer sends test input events to each component of the solution (e.g., a data streaming tool, an event library, a stream processing framework) and validates its output and behavior against the requirements. Then, end-to-end functional testing should confirm that the entire application works correctly.
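
A minimal sketch of the component-level check described above. It assumes a hypothetical stream-processing step, `enrich_order_event`, that takes one input event and returns one output event; the event fields and the business rule (orders over 1,000 are flagged for review) are illustrative assumptions, not part of any specific framework.

```python
from typing import Any, Callable

# Hypothetical component under test: one stream-processing step.
def enrich_order_event(event: dict[str, Any]) -> dict[str, Any]:
    """Add a computed total and a review flag to an order event."""
    total = event["quantity"] * event["unit_price"]
    return {
        "order_id": event["order_id"],
        "total": total,
        # Assumed business rule: large orders are flagged for manual review.
        "needs_review": total > 1000,
    }

def run_component_test(component: Callable, cases: list) -> list:
    """Send each test input event to the component and collect mismatches with the expected output."""
    failures = []
    for input_event, expected in cases:
        actual = component(input_event)
        if actual != expected:
            failures.append((input_event, expected, actual))
    return failures

# Test input events paired with the output the requirements call for.
TEST_CASES = [
    ({"order_id": "A1", "quantity": 2, "unit_price": 100.0},
     {"order_id": "A1", "total": 200.0, "needs_review": False}),
    ({"order_id": "A2", "quantity": 5, "unit_price": 300.0},
     {"order_id": "A2", "total": 1500.0, "needs_review": True}),
]

if __name__ == "__main__":
    failures = run_component_test(enrich_order_event, TEST_CASES)
    print("all cases passed" if not failures else failures)
```

The same harness can be reused for end-to-end tests by passing a function that pushes an event through the whole pipeline and returns what arrives at the end.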

For example, if your analytics solution comprises technologies from the Hadoop family (such as HDFS, YARN, MapReduce, and other applicable tools), a test engineer checks the seamless communication between them and their elements (e.g., the HDFS NameNode and DataNodes, the YARN ResourceManager and NodeManagers).
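
Part of this check can be scripted by reading the cluster’s own health reports. The sketch below assumes you have already fetched, as JSON, the NameNode JMX bean `Hadoop:service=NameNode,name=FSNamesystemState` (from the NameNode’s `/jmx` endpoint) and the ResourceManager cluster metrics (from `/ws/v1/cluster/metrics`). Field names such as `NumLiveDataNodes` and `activeNodes` should be verified against your Hadoop version, and the expected node counts are assumptions you supply.

```python
from typing import Any

FS_STATE_BEAN = "Hadoop:service=NameNode,name=FSNamesystemState"

def check_hadoop_health(namenode_jmx: dict[str, Any],
                        rm_metrics: dict[str, Any],
                        expected_datanodes: int,
                        expected_nodemanagers: int) -> list[str]:
    """Return a list of problems; an empty list means both layers look healthy."""
    problems = []

    # NameNode <-> DataNodes: locate the FSNamesystemState bean in the JMX payload.
    beans = [b for b in namenode_jmx.get("beans", []) if b.get("name") == FS_STATE_BEAN]
    if not beans:
        problems.append("FSNamesystemState bean not found in NameNode JMX output")
    else:
        state = beans[0]
        live = state.get("NumLiveDataNodes", 0)
        dead = state.get("NumDeadDataNodes", 0)
        if live < expected_datanodes:
            problems.append(f"only {live} of {expected_datanodes} DataNodes are live")
        if dead > 0:
            problems.append(f"{dead} DataNode(s) reported dead")

    # ResourceManager <-> NodeManagers: cluster metrics from the YARN REST API.
    metrics = rm_metrics.get("clusterMetrics", {})
    active = metrics.get("activeNodes", 0)
    if active < expected_nodemanagers:
        problems.append(f"only {active} of {expected_nodemanagers} NodeManagers are active")
    for field in ("lostNodes", "unhealthyNodes"):
        if metrics.get(field, 0) > 0:
            problems.append(f"{metrics[field]} NodeManager(s) reported as {field}")
    return problems
```

In a test run, the two dictionaries would typically come from `json.loads` over the HTTP responses; keeping the check as a pure function makes it easy to unit-test with recorded payloads.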

To ensure the big data application’s stable performance, performance test engineers should run tests that validate the core big data application functionality under load.
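
A minimal load-test sketch using only the Python standard library: it calls a function under test concurrently and reports latency percentiles. `call_under_test` is a hypothetical stand-in for a request to your application’s API; for production-scale load, a dedicated load-testing tool is usually the better choice.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor

def call_under_test() -> None:
    """Hypothetical stand-in for one request to the big data app's API."""
    time.sleep(0.01)

def timed_call(_: int) -> float:
    """Run one call and return its latency in milliseconds."""
    start = time.perf_counter()
    call_under_test()
    return (time.perf_counter() - start) * 1000

def run_load_test(total_requests: int, concurrency: int) -> dict:
    """Fire total_requests calls from `concurrency` worker threads and summarize latencies."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 94 is p95.
    cuts = statistics.quantiles(latencies, n=100)
    return {
        "requests": len(latencies),
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "max_ms": max(latencies),
    }

if __name__ == "__main__":
    print(run_load_test(total_requests=200, concurrency=20))
```

Comparing `p95_ms` against a latency requirement (rather than the average) keeps slow outliers visible, which matters when a few overloaded nodes drag down part of the traffic.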

To ensure the security of large volumes of highly sensitive data, a security test engineer should:

At a higher level of security provisioning, cybersecurity professionals perform application and network security scanning and penetration testing.

Test engineers check whether the big data warehouse processes SQL queries correctly and validate the business rules and transformation logic applied to DWH columns and rows.
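
A sketch of validating transformation logic and business rules with reconciliation queries, using Python’s built-in `sqlite3` as a stand-in for the warehouse. The `raw_orders` and `fact_sales` tables, the transformation, and the rules are hypothetical; in a real DWH you would run equivalent queries against the actual staging and target tables.

```python
import sqlite3

# In-memory SQLite database standing in for the DWH (assumption for illustration).
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Hypothetical staging table.
cur.execute("CREATE TABLE raw_orders (order_id TEXT, quantity INTEGER, unit_price REAL, status TEXT)")
cur.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?, ?)",
    [("A1", 2, 10.0, "shipped"), ("A2", 1, 99.5, "cancelled"), ("A3", 4, 2.5, "shipped")],
)

# Hypothetical transformation under test: keep shipped orders and compute the amount.
cur.execute("""
    CREATE TABLE fact_sales AS
    SELECT order_id, quantity * unit_price AS amount
    FROM raw_orders
    WHERE status = 'shipped'
""")

def violations(sql: str) -> int:
    """Run a reconciliation query that returns the number of rule violations (0 = OK)."""
    return cur.execute(sql).fetchone()[0]

# Each business rule is expressed as a query counting the rows that break it.
RULES = {
    "cancelled orders must not reach fact_sales": """
        SELECT COUNT(*) FROM fact_sales f
        JOIN raw_orders r ON r.order_id = f.order_id
        WHERE r.status = 'cancelled'""",
    "amount must equal quantity * unit_price": """
        SELECT COUNT(*) FROM fact_sales f
        JOIN raw_orders r ON r.order_id = f.order_id
        WHERE ABS(f.amount - r.quantity * r.unit_price) > 1e-9""",
    "row counts must reconcile between source and target": """
        SELECT ABS((SELECT COUNT(*) FROM raw_orders WHERE status = 'shipped')
                 - (SELECT COUNT(*) FROM fact_sales))""",
}

for rule, sql in RULES.items():
    print(f"{violations(sql)} violation(s): {rule}")
```

Writing each rule as a "count the bad rows" query keeps the checks declarative, so the same set can be rerun after every load.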

BI testing, as part of DWH testing, helps ensure data integrity within an online analytical processing (OLAP) cube and the smooth functioning of OLAP operations (e.g., roll-up, drill-down, slicing and dicing, pivot).
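
One BI-level integrity check, sketched: after a roll-up, each aggregate at the higher level should equal the sum of its children at the lower level. The sketch uses plain Python dictionaries as a hypothetical cube export (sales per region by day and by month); in practice you would query both levels from the cube itself, for example via MDX or your BI tool’s export.

```python
from collections import defaultdict

# Hypothetical cube export at day level: (region, "YYYY-MM-DD") -> sales.
daily = {
    ("EU", "2022-01-30"): 120.0,
    ("EU", "2022-01-31"): 80.0,
    ("EU", "2022-02-01"): 50.0,
    ("US", "2022-01-31"): 200.0,
}

# Hypothetical cube export at month level, as reported by the cube after a roll-up.
monthly = {
    ("EU", "2022-01"): 200.0,
    ("EU", "2022-02"): 50.0,
    ("US", "2022-01"): 210.0,  # deliberately inconsistent to show a detected defect
}

def roll_up(day_cells: dict) -> dict:
    """Aggregate day-level cells to month level by summing over the day dimension."""
    totals = defaultdict(float)
    for (region, day), value in day_cells.items():
        totals[(region, day[:7])] += value
    return dict(totals)

def find_rollup_mismatches(day_cells: dict, month_cells: dict, tol: float = 1e-6) -> list:
    """Return (cell, expected, reported) for month cells that differ from the sum of their days."""
    expected = roll_up(day_cells)
    keys = set(expected) | set(month_cells)
    return sorted(
        (key, expected.get(key, 0.0), month_cells.get(key, 0.0))
        for key in keys
        if abs(expected.get(key, 0.0) - month_cells.get(key, 0.0)) > tol
    )

print(find_rollup_mismatches(daily, monthly))  # → [(('US', '2022-01'), 200.0, 210.0)]
```

The same pattern applies to drill-down (children must sum back to the parent) and to slices (a slice total must match the filtered fact data).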

Non-relational database testing

Test engineers check how the database handles queries. It is also advisable to verify the database configuration parameters that may affect the application’s performance, as well as the data backup and restore process.
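
A minimal sketch of a backup-and-restore round-trip check: fingerprint a collection before backup, restore it, and compare. The fingerprint is order-independent because a distributed store may return documents in a different order after a restore. The JSON Lines backup and the in-memory list of documents are hypothetical stand-ins; with a real non-relational database you would use its native backup and restore tooling and read the documents back through its client.

```python
import hashlib
import json
import os
import tempfile

def fingerprint(documents: list[dict]) -> str:
    """Order-independent digest: hash each document canonically, then hash the sorted hashes."""
    doc_hashes = sorted(
        hashlib.sha256(json.dumps(doc, sort_keys=True).encode("utf-8")).hexdigest()
        for doc in documents
    )
    return hashlib.sha256("".join(doc_hashes).encode("utf-8")).hexdigest()

def backup(documents: list[dict], path: str) -> None:
    """Hypothetical backup step: write documents as JSON Lines."""
    with open(path, "w", encoding="utf-8") as f:
        for doc in documents:
            f.write(json.dumps(doc) + "\n")

def restore(path: str) -> list[dict]:
    """Hypothetical restore step: read documents back from the JSON Lines file."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]

def verify_round_trip(documents: list[dict]) -> bool:
    """Back up, restore, and confirm that the restored data matches the original."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "backup.jsonl")
        backup(documents, path)
        restored = restore(path)
    return len(restored) == len(documents) and fingerprint(restored) == fingerprint(documents)
```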

With big data applications, it is pointless and infeasible to strive for complete data consistency, accuracy, auditability, orderliness, and especially uniqueness (data is replicated by a number of the big data app’s components for the sake of fault tolerance). Still, big data test and data engineers should check whether your big data is of good enough quality on these potentially problematic levels:
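
Because perfect consistency and uniqueness are not a realistic target, one practical way to make "good enough" measurable is to compute quality metrics on a batch or sample and compare them against agreed tolerances. The sketch computes completeness and the duplicate rate; the field names and threshold values are hypothetical and would come from your own data quality requirements.

```python
def quality_report(records: list[dict], required_fields: list[str], key_fields: list[str]) -> dict:
    """Completeness = share of records with all required fields; duplicate rate by key fields."""
    total = len(records)
    if total == 0:
        return {"completeness": 1.0, "duplicate_rate": 0.0}
    complete = sum(
        all(r.get(f) not in (None, "") for f in required_fields) for r in records
    )
    keys = [tuple(r.get(f) for f in key_fields) for r in records]
    duplicates = total - len(set(keys))
    return {"completeness": complete / total, "duplicate_rate": duplicates / total}

def within_tolerance(report: dict, min_completeness: float, max_duplicate_rate: float) -> bool:
    """'Good enough' quality: metrics stay inside agreed thresholds instead of being perfect."""
    return (report["completeness"] >= min_completeness
            and report["duplicate_rate"] <= max_duplicate_rate)

if __name__ == "__main__":
    batch = [
        {"event_id": "e1", "user": "u1", "ts": 1},
        {"event_id": "e1", "user": "u1", "ts": 1},  # replicated event
        {"event_id": "e2", "user": None, "ts": 2},  # missing user
        {"event_id": "e3", "user": "u3", "ts": 3},
    ]
    report = quality_report(batch, required_fields=["user", "ts"], key_fields=["event_id"])
    print(report)  # {'completeness': 0.75, 'duplicate_rate': 0.25}
    print(within_tolerance(report, min_completeness=0.7, max_duplicate_rate=0.3))  # True
```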

The steps of a big data testing setup plan and their sequence may differ significantly depending on your business processes and the big data app’s specific requirements and architecture. Based on our ample experience in providing software testing and QA services, we list some common high-level stages.

Assign a QA manager to ensure your big data app’s requirements specification is designed in a testable way. Each big data application requirement should be clear, measurable, and complete; functional requirements can be designed in the form of user stories.

Also, the QA manager designs a KPI suite, including such software testing KPIs as the number of test cases created and run per iteration, the number of defects found per iteration, the number of rejected defects, overall test coverage, defect leakage, and more. In addition, a risk mitigation plan should be created to address possible quality risks in big data application testing.
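
Most of these KPIs are simple ratios, so they are easy to compute automatically from test management exports. The sketch assumes one common definition of defect leakage (defects found after the test cycle, e.g., in UAT or production, as a percentage of all valid defects for that scope) and uses requirements coverage as the coverage measure; definitions vary between organizations, and the `IterationStats` fields are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class IterationStats:
    """Hypothetical per-iteration counts exported from a test management tool."""
    test_cases_created: int
    test_cases_run: int
    defects_found: int         # defects reported by testers in this iteration
    defects_rejected: int      # reports closed as "not a defect", duplicates, etc.
    defects_leaked: int        # defects for this scope found later (UAT/production)
    requirements_total: int
    requirements_covered: int  # requirements with at least one test case

def pct(numerator: int, denominator: int) -> float:
    """Percentage that returns 0.0 instead of failing when the denominator is zero."""
    return 100.0 * numerator / denominator if denominator else 0.0

def kpis(s: IterationStats) -> dict[str, float]:
    valid_defects = s.defects_found - s.defects_rejected
    return {
        "test_execution_rate_pct": pct(s.test_cases_run, s.test_cases_created),
        "rejected_defects_pct": pct(s.defects_rejected, s.defects_found),
        # Assumed definition: leaked / (valid defects found in testing + leaked) * 100
        "defect_leakage_pct": pct(s.defects_leaked, valid_defects + s.defects_leaked),
        "requirements_coverage_pct": pct(s.requirements_covered, s.requirements_total),
    }

if __name__ == "__main__":
    print(kpis(IterationStats(200, 180, 40, 4, 4, 50, 45)))
```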

At this stage, you should also outline scenarios and schedules for communication between the development and testing teams. This way, test engineers gain a sufficient understanding of the big data app’s schema, which is essential for choosing the right testing granularity and for risk-based testing.

Finally, the QA manager chooses a suitable sourcing model for big data application testing.

Preparation for the big data testing process will differ based on the sourcing model you opt for: in-house testing or outsourced testing.

If you opt for in-house big data app testing, your QA manager outlines a big data testing approach, creates a big data application test strategy and plan, estimates the required effort, arranges training for test engineers, and recruits additional QA talent.

If you lack in-house QA resources to perform big data application testing, you can turn to outsourcing. To choose a reliable vendor, you should:

During big data application testing, your QA manager should regularly measure the outsourced big data testing progress against the outlined KPIs and mitigate possible communication issues.

Big data app testing can begin once the test environment and the test data management system are set up.

The sheer size of big data applications makes it infeasible to replicate an app completely in the test environment. Still, even though the test environment does not fully mirror production, make sure it provides high-capacity distributed storage so that you can run tests at different scales and levels of granularity.
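
Since the test environment holds only part of the production data, one common technique for running tests at different scales is deterministic, hash-based sampling: a record belongs to a subset if a hash of its key falls below a threshold. Because the same key always maps to the same value, subsets are reproducible across runs and nested (the 1% subset is contained in the 10% subset), and records sharing a key stay together. The key field `user_id` is a hypothetical example.

```python
import hashlib
from typing import Iterable, Iterator

def in_sample(key: str, fraction: float) -> bool:
    """Deterministically decide whether a key belongs to a sample of the given fraction (0..1)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Map the first 8 bytes of the digest to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < fraction

def sample_records(records: Iterable[dict], key_field: str, fraction: float) -> Iterator[dict]:
    """Yield only the records whose key falls into the sample."""
    for record in records:
        if in_sample(str(record[key_field]), fraction):
            yield record

if __name__ == "__main__":
    records = [{"user_id": i} for i in range(100_000)]
    small = list(sample_records(records, "user_id", 0.01))
    large = list(sample_records(records, "user_id", 0.10))
    print(len(small), len(large))  # roughly 1,000 and 10,000
```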

The QA manager should design a robust test data architecture and management system that all team members can easily use and that provides clear test data classification, quick scalability options, and a flexible test data structure.
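
A sketch of what clear test data classification might look like in code: a small catalog in which every test dataset carries a classification and a scale tag, so team members can find suitable data without guessing. The categories (`synthetic`, `masked_production`, `production_subset`), the fields, and the `DatasetCatalog` class are hypothetical; real classifications should follow your data governance and privacy rules.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional

class Classification(Enum):
    SYNTHETIC = "synthetic"                  # generated data, no real records
    MASKED_PRODUCTION = "masked_production"  # production copy with sensitive fields masked
    PRODUCTION_SUBSET = "production_subset"  # unmasked sample, restricted access

@dataclass(frozen=True)
class Dataset:
    name: str
    location: str   # e.g., a path in the test environment's distributed storage
    classification: Classification
    scale: str      # e.g., "1pct", "10pct"
    tags: frozenset = field(default_factory=frozenset)

class DatasetCatalog:
    """Hypothetical registry that team members query instead of copying data ad hoc."""

    def __init__(self) -> None:
        self._datasets: dict = {}

    def register(self, ds: Dataset) -> None:
        if ds.name in self._datasets:
            raise ValueError(f"dataset {ds.name!r} is already registered")
        self._datasets[ds.name] = ds

    def find(self, classification: Optional[Classification] = None,
             scale: Optional[str] = None, tag: Optional[str] = None) -> list:
        """Return datasets matching all given filters, sorted by name."""
        return sorted(
            (ds for ds in self._datasets.values()
             if (classification is None or ds.classification == classification)
             and (scale is None or ds.scale == scale)
             and (tag is None or tag in ds.tags)),
            key=lambda ds: ds.name,
        )
```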


© 2022 | All Rights Reserved